TL;DR: The calm before the cyberstorm is over. Enterprise ecosystems are under siege, not by hackers alone, but by complexity, latency, and blind spots in their observability stacks. Agentic AI, capable of machine-speed action and reasoning, is emerging as the only viable shield. But beware: deploying it poorly could be just as dangerous as not deploying it at all.

The Hidden Crisis in Digital Resilience

Most enterprises don’t know it yet, but they’re sitting on a ticking time bomb. Logs are being ignored. Threats are hiding in plain sight. Downtime is lurking one click away.

Agentic AI promises to fix all that — but not without risks of its own.

1. Root-Cause or Root-Chaos?

Agentic AI can diagnose issues across siloed systems in minutes, a task that used to take engineers hours. Sounds ideal, until you realize that a poorly controlled, hallucinating AI could trigger unnecessary shutdowns, misdiagnose incidents, or launch remediations for false positives.

Even scarier? It could completely miss the real threat while confidently executing the wrong fix.

2. Are You Catching Threats — or Are They Catching You?

Zero-day exploits and insider breaches are no longer rare; they’re constant. Relying on human SOC analysts alone is a losing battle. But if you deploy AI agents without strict guardrails, they might auto-escalate false alarms or, worse, ignore subtle yet catastrophic threats hiding in your encrypted traffic.

3. Real-Time Decisions — Real-Time Consequences

Agentic AI doesn’t sleep. It evaluates user behavior, device signals, and network patterns continuously. But giving an autonomous system that much control without human oversight is like handing the steering wheel of your company to a stranger — blindfolded.

One bad inference, and it’s your brand, data, and boardroom reputation on the line.

Agentic AI Isn’t Optional — But It Isn’t Foolproof

1. Humans Must Stay in the Loop (Or You’re Toast)

Fully automated workflows sound efficient, until they compound hallucinations, trigger cascading failures, or silently reroute security alerts. Enterprises need real humans to supervise, intervene, and retrain agents continuously, or they risk letting the AI drift into dangerous decisions.
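To make the human-in-the-loop principle concrete, here is a minimal sketch of an approval gate. It assumes a hypothetical agent that attaches a self-reported confidence score and a risk level to every remediation it proposes; `ProposedAction`, `Risk`, and `ApprovalGate` are illustrative names, not part of any Splunk or Cisco API. Only low-risk, high-confidence actions pass through automatically; everything else waits for an analyst.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Risk(Enum):
    LOW = "low"    # e.g. restart a stuck service
    HIGH = "high"  # e.g. isolate a production host


@dataclass
class ProposedAction:
    """A remediation step suggested by a (hypothetical) AI agent."""
    description: str
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0
    risk: Risk         # impact if the action turns out to be wrong


@dataclass
class ApprovalGate:
    """Auto-approves only low-risk, high-confidence actions; queues the rest."""
    min_confidence: float = 0.9
    executed: List[ProposedAction] = field(default_factory=list)
    pending_review: List[ProposedAction] = field(default_factory=list)

    def submit(self, action: ProposedAction) -> str:
        if action.risk is Risk.LOW and action.confidence >= self.min_confidence:
            # Appending stands in for actually running the remediation.
            self.executed.append(action)
            return "auto-executed"
        # High-risk or low-confidence: a human analyst must decide.
        self.pending_review.append(action)
        return "queued for human review"

    def approve(self, index: int) -> ProposedAction:
        """Record a human sign-off and move the action to the executed list."""
        action = self.pending_review.pop(index)
        self.executed.append(action)
        return action


gate = ApprovalGate()
print(gate.submit(ProposedAction("Restart stuck log forwarder", 0.95, Risk.LOW)))
# prints "auto-executed"
print(gate.submit(ProposedAction("Isolate production database host", 0.97, Risk.HIGH)))
# prints "queued for human review"
```

The gate never lets confidence alone unlock a high-risk action: a hallucinating agent can be confidently wrong, so high-impact steps always reach a human no matter how sure the agent claims to be.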

2. General-Purpose AI Can’t Be Trusted with Security

In OpenAI’s own evaluations, its o3 and o4-mini reasoning models hallucinated on 51% and 79% of questions, respectively, on the SimpleQA factual-accuracy benchmark. Now imagine a model like that misinterpreting a brute-force attack as a software update. That’s the cost of using general models instead of purpose-built security agents trained on your environment and augmented with domain-specific data via retrieval-augmented generation (RAG).

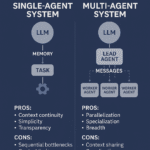

3. Protocol Chaos Is Coming

Without clear standards, integrating multiple agents could turn your IT ecosystem into a protocol battleground. Inter-agent miscommunication could cause duplicated tasks, partial remediation, or—worse—contradictory responses to live threats.

Avoiding that kind of digital entropy takes new interoperability protocols such as:

- MCP (Model Context Protocol) – to anchor models in reliable context

- A2A (Agent2Agent) – to keep rogue agents from clashing

- AGNTCY – to orchestrate it all with transparency
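Whatever protocol carries the messages, the coordination problem itself is easy to illustrate. The sketch below is not MCP, A2A, or AGNTCY; it is a hypothetical, single-process claim registry (`IncidentClaims` is an illustrative name) in which an agent must claim an incident before acting on it, so two agents cannot launch duplicate or contradictory remediations for the same alert. A real multi-agent deployment would need a shared, distributed store instead of an in-memory dictionary.

```python
import threading
from typing import Dict, Optional


class IncidentClaims:
    """Hypothetical shared registry: one agent owns each incident at a time."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}
        self._lock = threading.Lock()

    def claim(self, incident_id: str, agent_id: str) -> bool:
        """Atomically claim an incident; False if another agent already owns it."""
        with self._lock:
            owner = self._owners.setdefault(incident_id, agent_id)
            return owner == agent_id

    def release(self, incident_id: str, agent_id: str) -> None:
        """Release a claim; requests from non-owners are ignored."""
        with self._lock:
            if self._owners.get(incident_id) == agent_id:
                del self._owners[incident_id]

    def owner(self, incident_id: str) -> Optional[str]:
        """Return the agent currently responsible for an incident, if any."""
        with self._lock:
            return self._owners.get(incident_id)


claims = IncidentClaims()
claims.claim("INC-1", "edr-agent")      # the first agent to claim wins
claims.claim("INC-1", "network-agent")  # second agent backs off instead of acting
```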

4. Autonomy Without Governance = Instant Exposure

Traditional IAM frameworks are too slow for AI agents: they weren’t designed for autonomous actors making thousands of decisions per hour. Without rethinking access governance, audits, and privacy at agent scale, your enterprise leaves the door wide open to AI-induced security breaches.
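Rethinking governance at agent scale can start with giving every agent its own scoped identity. A minimal sketch, assuming a hypothetical in-process governor (not any Splunk, Cisco, or IAM-vendor API): each agent may perform only an allowlisted set of actions, is capped at a per-hour action budget tracked over a sliding window, and every decision, allowed or denied, is written to an audit log.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, FrozenSet, List, Optional, Tuple


@dataclass
class AgentPolicy:
    """Hypothetical per-agent policy: what it may do and how often."""
    agent_id: str
    allowed_actions: FrozenSet[str]
    max_actions_per_hour: int


@dataclass
class AgentGovernor:
    policies: Dict[str, AgentPolicy] = field(default_factory=dict)
    # (timestamp, agent_id, action, allowed, reason) for every decision
    audit_log: List[Tuple[float, str, str, bool, str]] = field(default_factory=list)
    _history: Dict[str, Deque[float]] = field(default_factory=dict, init=False)

    def register(self, policy: AgentPolicy) -> None:
        self.policies[policy.agent_id] = policy
        self._history[policy.agent_id] = deque()

    def authorize(self, agent_id: str, action: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        policy = self.policies.get(agent_id)
        if policy is None:
            allowed, reason = False, "unknown agent"
        elif action not in policy.allowed_actions:
            allowed, reason = False, "action out of scope"
        else:
            window = self._history[agent_id]
            # Sliding one-hour window: forget actions older than 3600 seconds.
            while window and now - window[0] >= 3600:
                window.popleft()
            if len(window) >= policy.max_actions_per_hour:
                allowed, reason = False, "rate limit exceeded"
            else:
                window.append(now)
                allowed, reason = True, "ok"
        # Log every decision, allowed or denied, for later audit.
        self.audit_log.append((now, agent_id, action, allowed, reason))
        return allowed
```

For example, an agent registered with `AgentPolicy("triage-bot", frozenset({"read_logs", "open_ticket"}), 2)` can read logs and open tickets at most twice per hour, while an attempt to call `disable_user` is denied and logged as out of scope.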

Splunk’s AI Stack: Your Lifeline in the Chaos

As agentic AI becomes the norm, Splunk, now part of Cisco, is embedding AI across its full security and observability platform, not just for speed but for safety.

By unifying operational data, enforcing tight governance, and fine-tuning agentic behaviors, Splunk offers a lifeline for enterprises that don’t want to gamble on blind automation.

Visit splunk.com/ai — before your next alert turns into your next crisis.